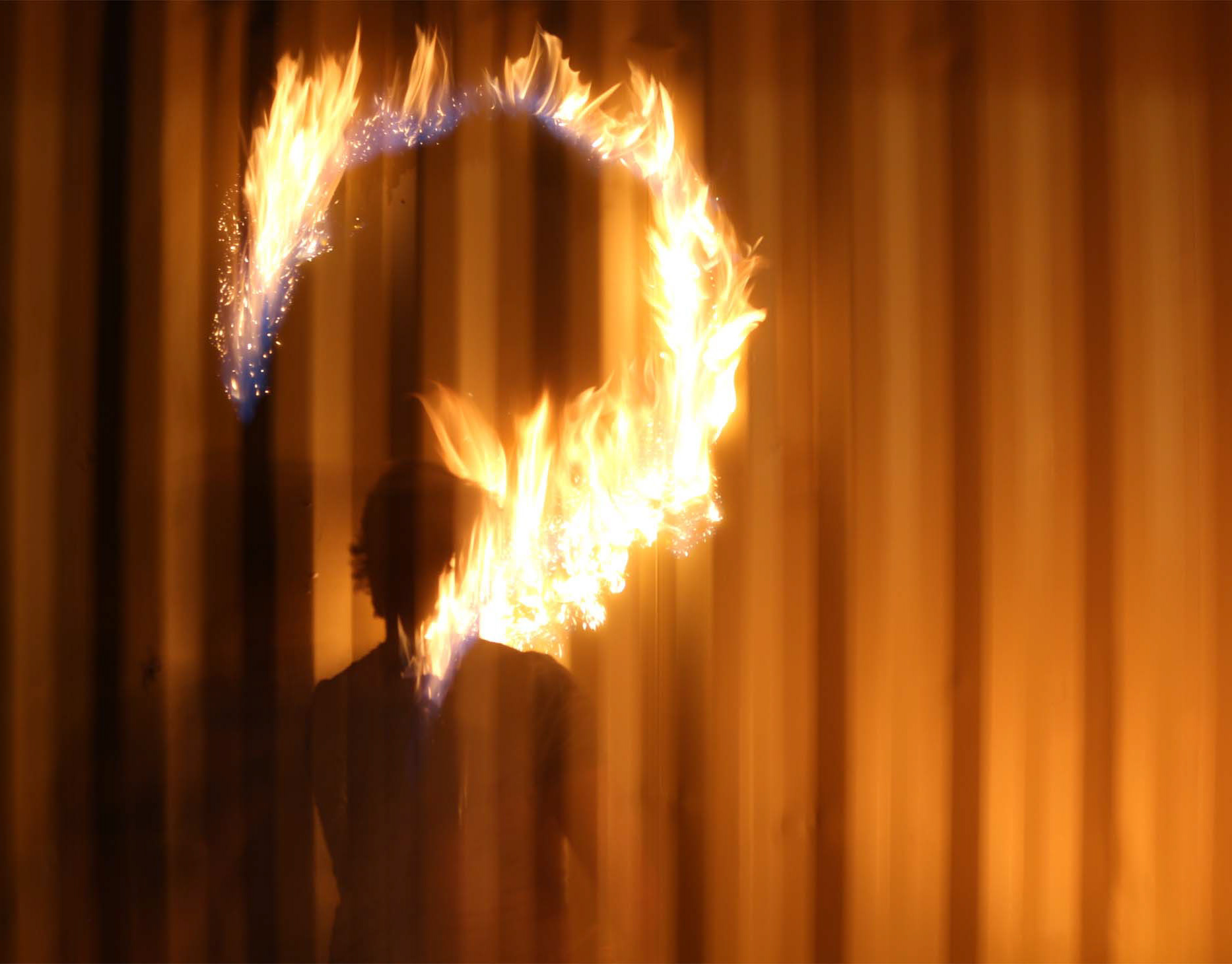

(Re)act is an exploration of the live performance setting and an investigation into how interactive technologies can enhance the connection between performer and audience in small to medium-sized venues. (Re)act uses the audience’s movements on a dance floor to generate the visuals of the performance, creating a collaborative experience that blurs the divide between performer and audience and makes the setting feel more intimate. At this point (re)act is a proof of concept; with further development and opportunity, more uses of interactive technologies could be explored within this setting.

To interact with (re)act, simply walk into the area observed by its sensors and watch as your movements light up the screen.

(Re)act uses a Microsoft Kinect to track a crowd's movement and dance moves in a dark space, then turns the collected data into the visuals of the performance, creating a collaborative experience between performer and audience. It was all coded in Processing 3 over the course of 10 weeks and took many prototypes and playtests with friends to get to the point where I wanted it.

I created this for my Honors year project, in which I wanted to explore the performance frame and use what I had learned throughout my degree to enhance this creative space.